Section 10.1: The Language of Hypothesis Testing

Objectives

By the end of this lesson, you will be able to...

- determine the null and alternative hypotheses from a claim

- explain Type I and Type II errors

- identify whether an error is Type I or Type II

- state conclusions to hypothesis tests


The Nature of Hypothesis Testing

According to the US Census Bureau, 64% of US citizens age 18 or older voted in the 2004 election. Suppose we believe that percentage is higher for ECC students for the 2008 presidential election. To determine if our suspicions are correct, we collect information from a random sample of 500 ECC students. Of those, 460 were citizens and 18 or older in time for the election. (We have some students still in high school, and some who do not yet have citizenship.) Of those who were eligible to vote, 326 (or about 71%) say that they did vote.

Problem: Based on this random sample, do we have enough evidence to say that the percentage of ECC students who were eligible to vote and did vote in the 2008 presidential election was higher than the proportion of US citizens who voted in the 2004 election?

Solution: 71% of the students in our sample voted. Obviously, this is higher than the national average. The thing to consider, though, is that this is just a sample of ECC students - it isn't every student. It's possible that the students who just happened to be in our sample were those who voted. Maybe this could just happen randomly. In order to determine if it really is that different from the national proportion, we need to find out how probable a sample proportion of 71% would be if the true proportion was really 64%.

To answer this, we consider the distribution of the sample proportion of eligible voters who did vote. From Section 8.2, we know that this proportion is approximately normally distributed if np(1-p)≥10. Using the techniques from that section, we also know that, if the true proportion is 64%, the sample proportion would have a mean of 64% and a standard deviation of about 2.2%. With this information, the probability of observing a random sample with a proportion of 71% or more if the true proportion is 64% is about 0.001.

This means that, if the true proportion really were 64%, only about 1 in 1000 random samples would have a proportion that large. So we have two possible explanations:
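The calculation described above can be sketched in a few lines of Python. This is only an illustration, assuming the normal model from Section 8.2 applies; the variable names are our own, and `NormalDist` comes from Python's standard `statistics` module.

```python
from math import sqrt
from statistics import NormalDist

p0 = 0.64   # proportion assumed true: the 2004 national turnout
n = 460     # eligible students in the sample
x = 326     # eligible students who said they voted

p_hat = x / n                          # sample proportion, about 0.709
normal_check = n * p0 * (1 - p0)       # should be at least 10 for the normal model
std_dev = sqrt(p0 * (1 - p0) / n)      # standard deviation of the sample proportion
z = (p_hat - p0) / std_dev             # how many standard deviations above 0.64
upper_tail = 1 - NormalDist().cdf(z)   # P(sample proportion >= p_hat) if p = 0.64

print(f"np(1-p) = {normal_check:.1f}")
print(f"standard deviation = {std_dev:.4f}")
print(f"z = {z:.2f}, probability = {upper_tail:.4f}")
```

The printed standard deviation and probability should agree with the rounded values of about 2.2% and about 0.001 quoted above.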

- We just observed an extremely rare event, or

- The proportion of ECC students who vote is actually higher than 64%.

This is the idea behind hypothesis testing. The general process is this:

Steps in Hypothesis Testing

- A claim is made. (More than 64% of ECC students vote, in our case.)

- Evidence is collected to test the claim. (We found that 326 of 460 voted.)

- The data are analyzed to assess the plausibility of the claim. (We determined that the proportion is most likely higher than 64% for ECC students.)

Null and Alternative Hypotheses

In statistics, we call these claims hypotheses. We have two types of hypotheses, a null hypothesis and an alternative hypothesis.

The null hypothesis, denoted H0 ("H-naught"), is a statement to be tested. It is usually the status quo, and is assumed true until evidence is found otherwise.

The alternative hypothesis, denoted H1 ("H-one"), is a claim to be tested. We will try to find evidence to support the alternative hypothesis.

There are three general ways in this chapter that we'll set up the null and alternative hypotheses.

- two-tailed

H0: parameter = some value

H1: parameter ≠ the value

- left-tailed

H0: parameter = some value

H1: parameter < the value

- right-tailed

H0: parameter = some value

H1: parameter > the value

Let's look at an example of each.

Example 1

In the introduction of this section, we were considering the proportion of ECC students who voted in the 2008 presidential election. We assumed that it was the same as the national proportion in 2004 (64%), and tried to find evidence that it was higher than that. In that case, our null and alternative hypotheses would be:

H0: p = 0.64

H1: p > 0.64

Example 2

According to the Elgin Community College website, the average age of ECC students is 28.2 years. We might claim that the average is less for online Mth120 students. In that case, our null and alternative hypotheses would be:

H0: μ = 28

H1: μ < 28

Example 3

It's fairly standard knowledge that IQ tests are designed to be normally distributed, with an average of 100. We wonder whether the IQ of ECC students is different from this average. Our hypotheses would then be:

H0: μ = 100

H1: μ ≠ 100

The Four Outcomes of Hypothesis Testing

Unfortunately, we never know with 100% certainty what is true in reality. We always make our decision based on sample data, which may or may not reflect reality. So we'll make our decision, but it may not always be correct.

In general, there are four possible outcomes from a hypothesis test when we compare our decision with what is true in reality - which we will never know!

- We could decide to not reject the null hypothesis when in reality the null hypothesis was true. This would be a correct decision.

- We could reject the null hypothesis when in reality the alternative hypothesis is true. This would also be a correct decision.

- We could reject the null hypothesis when it really is true. We call this error a Type I error.

- We could decide to not reject the null hypothesis, when in reality we should have, because the alternative was true. We call this error a Type II error.

| decision | reality: H0 true | reality: H1 true |
|---|---|---|
| do not reject H0 | correct decision | Type II error |
| reject H0 | Type I error | correct decision |
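As a small illustration, the four outcomes can be written as a function of two yes/no facts: whether H0 is true in reality, and whether we rejected it. The function name and arguments are our own invention for this sketch. Keep in mind that in practice we never know the first argument.

```python
def classify_outcome(null_is_true: bool, rejected_null: bool) -> str:
    """Name the outcome of a hypothesis test, given reality and our decision."""
    if rejected_null and null_is_true:
        return "Type I error"        # rejected H0 when it was really true
    if not rejected_null and not null_is_true:
        return "Type II error"       # kept H0 when H1 was really true
    return "correct decision"        # the decision matched reality

# Walk through all four combinations of reality and decision.
for null_is_true in (True, False):
    for rejected_null in (False, True):
        reality = "H0 true" if null_is_true else "H1 true"
        decision = "reject H0" if rejected_null else "do not reject H0"
        print(f"{reality}, {decision}: {classify_outcome(null_is_true, rejected_null)}")
```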

To help illustrate the idea, let's look at an example.

Example 4

Let's consider a pregnancy test. The tests work by looking for the presence of the hormone human chorionic gonadotropin (hCG), which is secreted by the placenta after the fertilized egg implants in a woman's uterus.

If we consider this in the language of a hypothesis test, the null hypothesis here is that the woman is not pregnant - this is what we assume is true until proven otherwise.

The corresponding chart for this test would look something like this:

| pregnancy test result | reality: not pregnant | reality: pregnant |
|---|---|---|
| not pregnant | correct decision | Type II error |
| pregnant | Type I error | correct decision |

In this case, we would call a Type I error a "false positive" - the test was positive for pregnancy, when in reality the woman was not pregnant.

The Type II error in this context would be a "false negative" - the test did not reveal the pregnancy, when the woman really was pregnant.

When a test claims that it is "99% Accurate at Detecting Pregnancies", it is referring to Type II errors. The tests claim to detect 99% of pregnancies, so it will make a Type II error (not detecting the pregnancy) only 1% of the time.

Note: The "99% Accurate" claim is not entirely correct. Many tests do not have this accuracy until a few days after a missed period. (Source: US Dept. of Health and Human Services)

Level of Significance

As notation, we assign two Greek letters, α ("alpha") and β ("beta"), to the probability of Type I and Type II errors, respectively.

α = P(Type I error) = P(rejecting H0 when H0 is true)

β = P(Type II error) = P(not rejecting H0 when H1 is true)

Unfortunately, we can't control both errors, so researchers choose the probability of making a Type I error before the sample is collected. We refer to this probability as the level of significance of the test.
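To see why both errors can't be made small at once, here is a rough sketch using the voting example from the start of this section. The values α = 0.05 and α = 0.01, and the "true" proportion of 0.71, are assumptions chosen only for illustration; the function name `beta_for` is hypothetical, and the normal model from Section 8.2 is assumed throughout.

```python
from math import sqrt
from statistics import NormalDist

def beta_for(alpha: float, p0: float = 0.64, p_true: float = 0.71, n: int = 460) -> float:
    """P(Type II error) for a right-tailed test of H0: p = p0 when p is really p_true."""
    se_null = sqrt(p0 * (1 - p0) / n)
    # We reject H0 only when the sample proportion exceeds this cutoff.
    cutoff = p0 + NormalDist().inv_cdf(1 - alpha) * se_null
    se_true = sqrt(p_true * (1 - p_true) / n)
    # Type II error: the sample proportion falls below the cutoff even though H1 is true.
    return NormalDist(p_true, se_true).cdf(cutoff)

for alpha in (0.05, 0.01):
    print(f"alpha = {alpha}: beta is about {beta_for(alpha):.3f}")
```

With the sample size fixed, lowering α raises the cutoff for rejecting H0, which in turn makes β larger.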

Choosing α, the Probability of a Type I Error

One interesting topic is the choice of α. How do we choose? The answer to that is to consider the consequences of the mistake. Consider two examples:

Example 5

Let's consider again a murder trial, with a possible death penalty if the defendant is convicted. The null and alternative hypotheses again are:

H0: the defendant is innocent

H1: the defendant is guilty

In this case, a Type I error (rejecting the null when it really is true) would be when the jury returns a "guilty" verdict, when the defendant is actually innocent.

In our judicial system, we say that we must be sure "beyond a reasonable doubt". And in this case in particular, the consequences of a mistake would be the death of an innocent defendant.

Clearly in this case, we want the probability of making this error to be very small, so we might assign to α a value like 0.00001. (So about 1 out of every 100,000 such trials of an innocent defendant will result in an incorrect guilty verdict. You might choose an even smaller value - especially if you are the defendant in question!)

Example 6

As an alternative, suppose we're considering the average age of ECC students. As stated in Example 2, we assumed that average age is 28, but we think it might be lower for online Mth120 students.

H0: μ = 28

H1: μ < 28

In this example, α = P(concluding that the average age is less than 28, when it really is 28). The consequences of that mistake are... that we're wrong about the average age. Certainly nothing as dire as sending an innocent defendant to jail - or worse.

Because the consequences of a Type I error are not nearly as dire, we might assign a value of α = 0.05.
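One way to get a feel for the two choices of α in Examples 5 and 6 is to ask how many standard deviations from the null value a result must fall before we would reject H0. The sketch below assumes a one-tailed test whose test statistic follows the standard normal distribution; `critical_z` is a name made up for this illustration.

```python
from statistics import NormalDist

def critical_z(alpha: float) -> float:
    """z-value that cuts off a tail of area alpha under the standard normal curve."""
    return NormalDist().inv_cdf(1 - alpha)

for alpha in (0.05, 0.00001):
    print(f"alpha = {alpha}: reject H0 only beyond {critical_z(alpha):.2f} standard deviations")
```

The smaller α is, the stronger the evidence must be before we reject the null hypothesis.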

An Interesting Example of Type I and II Errors

Example 7

Let's look at a different example. Suppose we have a test for the deadly disease statisticitis, which affects approximately 5% of the general population.

Problem: If a test is developed that claims to be 90% accurate, what is the probability that an individual with a positive test result for statisticitis actually has the disease?

Solution: One good way to do this is to start with 1000 theoretical individuals. Since we know 5% of the population has the disease, 5% of the 1000, or 50, must have the disease. We can illustrate this in a chart like the ones we did earlier:

| test result | reality: no disease | reality: disease | total |
|---|---|---|---|
| negative | | | |
| positive | | | |
| total | 950 | 50 | 1000 |

We're also told that the test is "90% accurate", so 90% of those 50 with the disease will have positive test results. That gives us something like the following:

| test result | reality: no disease | reality: disease | total |
|---|---|---|---|
| negative | | 0.1*50 = 5 | |
| positive | | 0.9*50 = 45 | |
| total | 950 | 50 | 1000 |

Now, we also have to consider the test being "90% accurate" and that it should return a negative result for 90% of the 950 who do not have the disease.

| test result | reality: no disease | reality: disease | total |
|---|---|---|---|
| negative | 0.9*950 = 855 | 5 | |
| positive | 0.1*950 = 95 | 45 | |
| total | 950 | 50 | 1000 |

So in summary, we have the following table:

| test result | reality: no disease | reality: disease | total |
|---|---|---|---|
| negative | 855 | 5 | 860 |
| positive | 95 | 45 | 140 |
| total | 950 | 50 | 1000 |

We were asked originally for the probability that an individual with a positive test actually has the disease. In other words, we want:

P(has disease | positive test) = 45/140 ≈ 0.32

What does this mean? Well, if this test is "90% accurate", then a positive test result actually only means you have the disease about 32% of the time!

On the other hand, it's interesting to note that a negative result is fairly accurate - 855/860 ≈ 0.994 = 99.4%. So you can feel pretty confident of a negative result.
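The table for Example 7 can be rebuilt with a short function, which makes it easy to try other values. As in the example, "90% accurate" is taken to mean that the test gives the right answer for 90% of people with the disease and for 90% of people without it. The function and key names are our own.

```python
def screening_table(population: float, prevalence: float, accuracy: float) -> dict:
    """Expected counts for a test that is correct for `accuracy` of each group."""
    diseased = population * prevalence
    healthy = population - diseased
    true_pos = accuracy * diseased    # diseased, test positive
    false_neg = diseased - true_pos   # diseased, test negative
    true_neg = accuracy * healthy     # healthy, test negative
    false_pos = healthy - true_neg    # healthy, test positive
    return {
        "true_pos": true_pos, "false_neg": false_neg,
        "true_neg": true_neg, "false_pos": false_pos,
        # P(has disease | positive test)
        "p_disease_given_positive": true_pos / (true_pos + false_pos),
        # P(no disease | negative test)
        "p_healthy_given_negative": true_neg / (true_neg + false_neg),
    }

table = screening_table(population=1000, prevalence=0.05, accuracy=0.90)
print(f"P(disease | positive) = {table['p_disease_given_positive']:.2f}")
print(f"P(no disease | negative) = {table['p_healthy_given_negative']:.3f}")
```

Changing `prevalence` shows how strongly the result depends on how rare the disease is: the rarer the disease, the less a positive result tells us.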

This may seem unreal, but some tests really do work this way. What is done to balance this out is to treat a "positive" test result as not definitive - it simply warrants more study.

The test for gestational diabetes is a perfect example. The test is performed in two stages - an initial "screening", which is extended to a second test if the result is positive.

Stating Conclusions to Hypothesis Tests

We have to be very careful when we state our conclusions. The way this type of hypothesis testing works, we look for evidence to support the alternative claim. If we find it, we say we have enough to support the alternative. If we don't find that evidence, we say just that - we don't have enough evidence to support the alternative hypothesis.

We never support the null hypothesis. In fact, the null hypothesis is probably not exactly true. There are some very interesting discussions of this type of hypothesis testing (see links at the end of this page), and one criticism is that a large enough sample will probably show a difference from the null hypothesis.

That said, we look for evidence to support the alternative hypothesis. If we don't find it, then we simply say that we don't have enough evidence to support the alternative hypothesis.

To illustrate, consider the three examples from earlier in this section:

Example 8

Let's consider Example 1, introduced earlier in this section. The null and alternative hypotheses were as follows:

H0: p = 0.64

H1: p > 0.64

Suppose we decide that the evidence supports the alternative claim (it does). Our conclusion would then be:

There is enough evidence to support the claim that the proportion of ECC students who were eligible to vote and did was more than the national proportion of 64% who voted in 2004.

Example 9

In Example 2, we stated null and alternative hypotheses:

H0: μ = 28

H1: μ < 28

Suppose we find an average age of 20.4, and we decide to reject the null hypothesis. Our conclusion would then be:

There is enough evidence to support our claim that the average age of online Mth120 students is less than 28 years.

Example 10

In Example 3, we stated null and alternative hypotheses:

H0: μ = 100

H1: μ ≠ 100

Suppose we find an average IQ from a sample of 30 students to be 101, which we determine is not significantly different from the assumed mean. Our conclusion would then be:

There is not enough evidence to support the claim that the average IQ of ECC students is not 100.
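The wording pattern in Examples 8-10 can be captured in a small helper. This is just a sketch with a made-up function name. Notice that neither branch ever says the null hypothesis is true; both are statements about the alternative claim.

```python
def state_conclusion(reject_null: bool, alternative_claim: str) -> str:
    """Phrase a conclusion in terms of the alternative claim, never the null."""
    if reject_null:
        return f"There is enough evidence to support the claim that {alternative_claim}."
    return f"There is not enough evidence to support the claim that {alternative_claim}."

# Example 9: we rejected H0, so we support the alternative.
print(state_conclusion(True, "the average age of online Mth120 students is less than 28 years"))

# Example 10: we did not reject H0, so we only say the evidence is lacking.
print(state_conclusion(False, "the average IQ of ECC students is not 100"))
```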

The Controversy Regarding Hypothesis Testing

Unlike other fields in mathematics, there are many areas in statistics which are still being debated. Hypothesis Testing is one of them. There are several concerns that any good statistician should be aware of.

- Tests are significantly affected by sample size

- Not rejecting the null hypothesis does not mean it is true

- Statistical significance does not imply practical significance

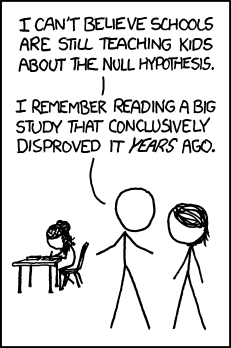

- Repeated tests can be misleading (see this illuminating comic from XKCD)
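To see why repeated tests can be misleading, suppose we run several independent tests, each at level α, and the null hypothesis is actually true in every one of them. The chance of at least one Type I error grows quickly with the number of tests. The sketch below assumes independence; the numbers of tests shown are chosen only for illustration.

```python
def prob_at_least_one_false_positive(alpha: float, num_tests: int) -> float:
    """P(at least one Type I error) in num_tests independent tests where H0 is true."""
    # Each test avoids a Type I error with probability 1 - alpha.
    return 1 - (1 - alpha) ** num_tests

for num_tests in (1, 5, 20):
    p = prob_at_least_one_false_positive(0.05, num_tests)
    print(f"{num_tests} tests at alpha = 0.05: P(at least one false positive) = {p:.2f}")
```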

Much more subtly, the truth is likely that the null hypothesis is never exactly true, and rejecting it is only a matter of getting a large enough sample. Consider Example 6, in which we assumed the average age was 28. Well... to how many digits? Isn't it likely that the average age is actually 27.85083 or something of the sort? In that case, all we need is a large enough sample to get a sample mean that is statistically less than 28, even though the difference really has no practical meaning.
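A rough calculation shows how large "large enough" might be. The sketch below assumes a population standard deviation of 10 years and α = 0.05; neither value comes from the example, and both are chosen only for illustration. It asks how big the sample must be before a sample mean sitting exactly at 27.85083 would count as significantly less than 28.

```python
from math import ceil
from statistics import NormalDist

null_mean = 28.0
true_mean = 27.85083   # the hypothetical true average from the text
sigma = 10.0           # assumed population standard deviation (not from the text)
alpha = 0.05           # assumed level of significance

z_crit = NormalDist().inv_cdf(1 - alpha)   # about 1.645 for a one-tailed test

def z_statistic(n: int) -> float:
    """z-score of a sample mean equal to true_mean, for a sample of size n."""
    return (true_mean - null_mean) / (sigma / n ** 0.5)

# Smallest n for which that z-score falls below -z_crit.
n_needed = ceil((z_crit * sigma / (null_mean - true_mean)) ** 2)

print(f"z with n = 100: {z_statistic(100):.2f}")
print(f"sample size needed: {n_needed}")
```

Under these assumptions it takes a sample of more than twelve thousand students to "detect" a difference of about 0.15 years, which illustrates the gap between statistical and practical significance.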

If you're interested in reading more about some of the weaknesses of this method for testing hypotheses, visit these links:

- Commentaries on Significance Testing

- The Concept of Statistical Significance Testing

- Null-Hypothesis Controversy

- The Controversy Over How to Present Research Findings

So Why Are We Studying Hypothesis Testing?

The reality is that we need some way to analytically make decisions regarding our observations. While it is simplistic and certainly contains errors, hypothesis testing still does have value, provided we understand its limitations.

This is also an introductory statistics course. There are limits to what we can learn in a single semester. There are more robust approaches that complement or replace a basic hypothesis test, including effect size, power analysis, and Bayesian inference. Unfortunately, many of these are simply beyond the scope of this course.

The point of this discussion is to be clear that hypothesis testing has weaknesses. By understanding them, we can make clear statements about the results of a hypothesis test and what we can actually conclude.